| Project Type | Solo project |
| --- | --- |
| Project Timeline | 4 weeks (2020) |
| Software Used | OpenGL, Visual Studio |
| Languages Used | C++, GLSL |

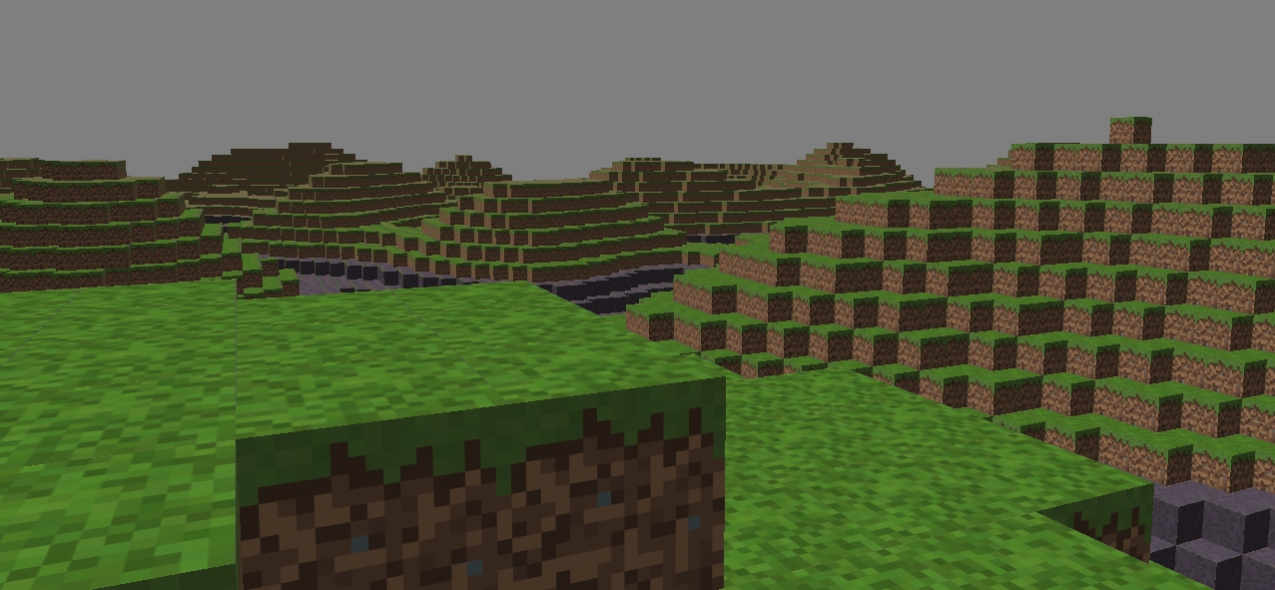

Over a period of four weeks I developed a Voxel Engine from scratch using modern OpenGL. It contains features such as camera movement/rotation, directional lighting, mesh rendering, multithreaded chunk loading and texture support. This is my first project working with C++ and OpenGL.

To render a mesh in modern OpenGL, three key elements are needed: a Vertex Buffer Object (VBO), a Vertex Buffer Attribute (VBA) layout, and a shader.

Vertex Buffer Object

A VBO is an array of bytes that holds all the vertex data of a mesh. This data can consist of positions, UV coordinates, normals, and other per-vertex attributes. In this project each vertex stores a position, normal, color, and UV coordinate.

This code snippet shows the header file of the VBO class.

    #pragma once

    class VertexBuffer {
    private:
        unsigned int m_RendererId;

    public:
        VertexBuffer(const void* data, unsigned int size);
        ~VertexBuffer();

        void Update(const void* data, unsigned int size);

        void Bind() const;
        void Unbind() const;
    };

This code snippet shows the cpp file of the VBO class.

    #include "VertexBuffer.h"

    #include <GL/glew.h>

    // Creates the buffer and uploads `size` bytes from `data`.
    VertexBuffer::VertexBuffer(const void* data, unsigned int size)
    {
        glGenBuffers(1, &m_RendererId);
        glBindBuffer(GL_ARRAY_BUFFER, m_RendererId);
        glBufferData(GL_ARRAY_BUFFER, size, data, GL_DYNAMIC_DRAW);
    }

    VertexBuffer::~VertexBuffer()
    {
        glDeleteBuffers(1, &m_RendererId);
    }

    // Replaces the buffer contents with `size` bytes from `data`.
    void VertexBuffer::Update(const void* data, unsigned int size)
    {
        Bind();
        // Same usage hint as the constructor, since the buffer is re-uploaded.
        glBufferData(GL_ARRAY_BUFFER, size, data, GL_DYNAMIC_DRAW);
    }

    void VertexBuffer::Bind() const
    {
        glBindBuffer(GL_ARRAY_BUFFER, m_RendererId);
    }

    void VertexBuffer::Unbind() const
    {
        glBindBuffer(GL_ARRAY_BUFFER, 0);
    }

Vertex Buffer Attribute

The VBA tells OpenGL how the data inside the VBO is laid out. Here each vertex consists of a position (3 floats), a normal (3 floats), a color (3 floats), and a UV coordinate (2 floats). The VBA states that the first three floats of a vertex are the position, the next three floats are the normal, and so on. This is done inside the Mesh class.

This code shows the Vertex struct.

    struct Vertex
    {
        glm::vec3 Position, Normal, Color;
        glm::vec2 UVCoord;

        Vertex(
            glm::vec3 position = glm::vec3(0, 0, 0),
            glm::vec3 normal = glm::vec3(0, 0, 0),
            glm::vec3 color = glm::vec3(0, 0, 0),
            glm::vec2 textCoord = glm::vec2(0, 0))
            : Position(position), Normal(normal), Color(color), UVCoord(textCoord)
        {
        }
    };

The VBA is set in the constructor of the Mesh class using the method shown below.

    void Mesh::SetAttribPointers()
    {
        // Location 0: position, 3 floats at the start of each vertex
        glEnableVertexAttribArray(0);
        glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)0);

        // Location 1: normal, 3 floats
        glEnableVertexAttribArray(1);
        glVertexAttribPointer(1, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, Normal));

        // Location 2: color, 3 floats
        glEnableVertexAttribArray(2);
        glVertexAttribPointer(2, 3, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, Color));

        // Location 3: UV coordinate, 2 floats
        glEnableVertexAttribArray(3);
        glVertexAttribPointer(3, 2, GL_FLOAT, GL_FALSE, sizeof(Vertex), (void*)offsetof(Vertex, UVCoord));
    }
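To make the layout concrete, the byte offsets and stride handed to glVertexAttribPointer can be checked on the CPU. The following is only a sketch under stated assumptions: Vec3 and Vec2 are hypothetical plain-float stand-ins for glm::vec3 and glm::vec2, VertexLayout mirrors the member order of the Vertex struct above, and kAttributes and OffsetOf are illustrative names that do not exist in the engine.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical stand-ins for glm::vec3 / glm::vec2: tightly packed floats.
struct Vec3 { float x, y, z; };
struct Vec2 { float x, y; };

// Same member order as the Vertex struct: position, normal, color, UV.
struct VertexLayout {
    Vec3 Position, Normal, Color;
    Vec2 UVCoord;
};

// One attribute as glVertexAttribPointer would receive it.
struct Attribute {
    unsigned int location;   // layout(location = N) in the shader
    int componentCount;      // number of floats in the attribute
    std::size_t byteOffset;  // offset from the start of one vertex
};

// The stride is the size of one whole vertex: 11 floats.
constexpr std::size_t kStride = sizeof(VertexLayout);
static_assert(kStride == 11 * sizeof(float), "vertex must be tightly packed");

const Attribute kAttributes[4] = {
    {0, 3, offsetof(VertexLayout, Position)},
    {1, 3, offsetof(VertexLayout, Normal)},
    {2, 3, offsetof(VertexLayout, Color)},
    {3, 2, offsetof(VertexLayout, UVCoord)},
};

// Byte offset of the attribute bound to a shader location (0..3).
inline std::size_t OffsetOf(unsigned int location) {
    assert(location < 4);
    return kAttributes[location].byteOffset;
}
```

Each attribute starts where the previous one ends, so the offsets grow by three floats at a time and the UV coordinate begins nine floats into the vertex. Using offsetof instead of hand-written numbers keeps the attribute setup correct if members are later added or reordered.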

Shader

To tell the GPU how to render the mesh onto the screen we need two shaders: a vertex shader and a fragment shader. For this project I created a simple shader that can display textures and compute simple directional lighting.
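The directional lighting in the vertex shader shown further down comes down to a dot product between the surface normal and the reversed light direction, remapped from [-1, 1] to [0.1, 1.0] so that faces pointing away from the light keep some brightness. Here is a minimal CPU-side sketch of that arithmetic. Vec3, Dot, Normalize, and DiffuseFactor are illustrative names, not part of the engine.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float Dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

inline Vec3 Normalize(const Vec3& v) {
    const float length = std::sqrt(Dot(v, v));
    assert(length > 0.0f);  // a zero vector has no direction
    return {v.x / length, v.y / length, v.z / length};
}

// Same formula as the shader's map(): linear remap of value
// from [min1, max1] to [min2, max2].
inline float Map(float value, float min1, float max1, float min2, float max2) {
    return min2 + (value - min1) * (max2 - min2) / (max1 - min1);
}

// Brightness of a face with the given normal under a directional light.
// lightDir points from the light towards the scene, hence the negation.
inline float DiffuseFactor(const Vec3& normal, const Vec3& lightDir) {
    const Vec3 l = Normalize(lightDir);
    const Vec3 toLight{-l.x, -l.y, -l.z};
    return Map(Dot(Normalize(normal), toLight), -1.0f, 1.0f, 0.1f, 1.0f);
}
```

With a light shining straight down, the top face of a cube gets full brightness, its sides land halfway at about 0.55, and the bottom face keeps the 0.1 minimum instead of going black.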

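I chose to place the vertex and fragment shader in the same file, separated by #shader vertex and #shader fragment marker lines. These markers are not GLSL; they only tell the loading code where each shader begins. The following code snippet shows the two shaders.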

    #shader vertex
    #version 330 core

    layout(location = 0) in vec4 position;
    layout(location = 1) in vec3 normal;
    layout(location = 2) in vec3 color;
    layout(location = 3) in vec2 _uvCoord;

    uniform mat4 mvp;
    uniform mat4 model;
    uniform vec3 lightDir;

    out float diff;
    out vec3 fragPos;
    out vec3 objectColor;
    out vec2 uvCoord;

    // Linearly remaps value from [min1, max1] to [min2, max2].
    float map(float value, float min1, float max1, float min2, float max2)
    {
        return min2 + (value - min1) * (max2 - min2) / (max1 - min1);
    }

    void main()
    {
        gl_Position = mvp * position;

        // Transform the normal as a direction: mat3(model) drops the translation
        // (valid for rotation and uniform scale), then renormalize.
        vec3 norm = normalize(mat3(model) * normal);

        // Remap the diffuse term from [-1, 1] to [0.1, 1.0] so unlit faces are not black.
        diff = map(dot(norm, -normalize(lightDir)), -1.0f, 1.0f, 0.1f, 1.0f);
        objectColor = color;
        uvCoord = _uvCoord;
    }

    #shader fragment
    #version 330 core

    layout(location = 0) out vec4 color;

    uniform sampler2D u_Texture;

    in float diff;
    in vec3 objectColor;
    in vec2 uvCoord;

    void main()
    {
        vec4 texColor = texture(u_Texture, uvCoord);
        vec3 result = vec3(texColor.x, texColor.y, texColor.z) * diff;
        color = vec4(result, texColor.w);
    }
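Because #shader vertex and #shader fragment are not GLSL directives, the combined file has to be split before each part is handed to OpenGL. The loading code is not shown in this write-up, so the following is only one possible approach. ShaderSources and SplitShaderSource are hypothetical names, and the function works on a string so it can be tried without a GL context.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// The two GLSL sources extracted from one combined file.
struct ShaderSources {
    std::string vertex;
    std::string fragment;
};

// Splits a combined shader file on "#shader vertex" / "#shader fragment"
// marker lines. The marker lines themselves are dropped.
inline ShaderSources SplitShaderSource(const std::string& combined) {
    enum class Stage { None, Vertex, Fragment };
    Stage stage = Stage::None;
    ShaderSources sources;

    std::istringstream stream(combined);
    std::string line;
    while (std::getline(stream, line)) {
        // rfind(..., 0) == 0 means "line starts with #shader".
        if (line.rfind("#shader", 0) == 0) {
            if (line.find("vertex") != std::string::npos)
                stage = Stage::Vertex;
            else if (line.find("fragment") != std::string::npos)
                stage = Stage::Fragment;
            else
                stage = Stage::None;  // unknown marker: skip until the next one
            continue;
        }

        if (stage == Stage::Vertex)
            sources.vertex += line + '\n';
        else if (stage == Stage::Fragment)
            sources.fragment += line + '\n';
    }
    return sources;
}
```

Each resulting string starts with its own #version line, so it can be passed to glShaderSource as is. Lines that appear before the first marker are ignored.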

The terrain is generated using a single layer of Perlin noise. To build the terrain mesh, only the visible faces of the cubes are added. The vertex and index data of each visible quad is stored in standard library vectors (std::vector), which the Mesh constructor then uses to create the VBO and the Index Buffer Object (IBO). The code snippet below shows how the mesh of a terrain chunk is created.

    void Chunk::GenerateMesh()
    {
        //Empty the vertices and indices vectors
        vertices.clear();
        indices.clear();

        for (unsigned int x = 0; x < xSize; x++)
        {
            for (unsigned int z = 0; z < zSize; z++)
            {
                for (unsigned int y = 0; y < ySize; y++)
                {
                    //Don't call the MakeCube function if block is of type AIR
                    if (static_cast<Block>(GetCell(x, y, z)) == AIR)
                        continue;

                    glm::vec3 pos = glm::vec3(x, y, z);
                    MakeCube(pos);
                }
            }
        }
    }

    void Chunk::MakeCube(glm::vec3 &position)
    {
        for (int i = 0; i < 6; i++)
        {
            //Check if face is visible
            if (GetNeighbor(position.x, position.y, position.z, static_cast<Direction>(i)) == AIR)
                MakeFace(i, position);
        }
    }

    void Chunk::MakeFace(int &dir, glm::vec3 &position)
    {
        int nVertices = vertices.size();

        GetFaceVertices(dir, position);

        //Add face indices to the indices vector
        indices.emplace_back(nVertices);
        indices.emplace_back(nVertices + 2);
        indices.emplace_back(nVertices + 1);
        indices.emplace_back(nVertices + 2);
        indices.emplace_back(nVertices + 3);
        indices.emplace_back(nVertices + 1);
    }

    void Chunk::GetFaceVertices(int &dir, glm::vec3 &position)
    {
        Block blockType = static_cast<Block>(GetCell(position.x, position.y, position.z));
        glm::vec3 color = GetColor(blockType);
        const glm::vec2* uvCoords = GetUVs(blockType, dir);

        //Add face vertices to the vertices vector
        vertices.emplace_back(
            normalizedVertices[quads[dir].x] + position + m_Offset,
            normals[dir],
            color,
            uvCoords[0]);

        vertices.emplace_back(
            normalizedVertices[quads[dir].y] + position + m_Offset,
            normals[dir],
            color,
            uvCoords[1]);

        vertices.emplace_back(
            normalizedVertices[quads[dir].z] + position + m_Offset,
            normals[dir],
            color,
            uvCoords[2]);

        vertices.emplace_back(
            normalizedVertices[quads[dir].w] + position + m_Offset,
            normals[dir],
            color,
            uvCoords[3]);
    }
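GetCell and GetNeighbor are not shown above. The sketch below is one way the visibility test could work, under assumptions that are mine rather than the engine's: blocks live in a flat std::vector indexed as x + xSize * (y + ySize * z), there are only two block types, the neighbor order is +x, -x, +y, -y, +z, -z, and any coordinate outside the chunk counts as AIR. TinyChunk and CountVisibleFaces are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum Block : unsigned char { AIR = 0, SOLID = 1 };

// Offsets to the six neighbors (order is an assumption): +x, -x, +y, -y, +z, -z.
constexpr int kNeighborOffsets[6][3] = {
    {1, 0, 0}, {-1, 0, 0}, {0, 1, 0}, {0, -1, 0}, {0, 0, 1}, {0, 0, -1}};

class TinyChunk {
public:
    TinyChunk(int xSize, int ySize, int zSize, Block fill)
        : m_X(xSize), m_Y(ySize), m_Z(zSize),
          m_Cells(static_cast<std::size_t>(xSize) * ySize * zSize, fill) {}

    // Cells outside the chunk are treated as AIR.
    Block GetCell(int x, int y, int z) const {
        return InBounds(x, y, z) ? m_Cells[Index(x, y, z)] : AIR;
    }

    void SetCell(int x, int y, int z, Block block) {
        assert(InBounds(x, y, z));
        m_Cells[Index(x, y, z)] = block;
    }

    Block GetNeighbor(int x, int y, int z, int dir) const {
        return GetCell(x + kNeighborOffsets[dir][0],
                       y + kNeighborOffsets[dir][1],
                       z + kNeighborOffsets[dir][2]);
    }

    // Counts the faces a mesher would emit: solid cell with an AIR neighbor.
    int CountVisibleFaces() const {
        int faces = 0;
        for (int x = 0; x < m_X; x++)
            for (int z = 0; z < m_Z; z++)
                for (int y = 0; y < m_Y; y++) {
                    if (GetCell(x, y, z) == AIR)
                        continue;
                    for (int dir = 0; dir < 6; dir++)
                        if (GetNeighbor(x, y, z, dir) == AIR)
                            faces++;
                }
        return faces;
    }

private:
    bool InBounds(int x, int y, int z) const {
        return x >= 0 && x < m_X && y >= 0 && y < m_Y && z >= 0 && z < m_Z;
    }

    std::size_t Index(int x, int y, int z) const {
        return static_cast<std::size_t>(x + m_X * (y + m_Y * z));
    }

    int m_X, m_Y, m_Z;
    std::vector<Block> m_Cells;
};
```

A solid 3 x 3 x 3 chunk has 27 cubes and 162 faces in total, but only the 54 on the outside pass the test. Since every visible face adds four vertices and six indices, the buffers shrink by the same factor. Treating out-of-bounds cells as AIR also emits faces on chunk borders that may be hidden by a neighboring chunk; whether the engine does this is not stated here.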

The following code shows the cpp file of the camera class.

    #include "Camera.h"

    #include <glm/gtc/matrix_transform.hpp>
    #include <iostream>

    Camera::Camera(glm::vec3 position, glm::vec3 front, glm::vec3 up)
        : m_Position(position), m_Front(front), m_Up(up)
    {
        m_ViewMatrix = glm::lookAt(position, GetViewDir(), up);
        m_LastFrame = glfwGetTime();
        m_Yaw = -90;
        m_Pitch = 0;
    }

    Camera::~Camera()
    {
    }

    void Camera::ProcessInput(GLFWwindow* window)
    {
        SetTime();
        CalculatePosition(window);
        CalculateDirection(window);
        m_ViewMatrix = glm::lookAt(m_Position, GetViewDir(), m_Up);
    }

    void Camera::CalculatePosition(GLFWwindow* window)
    {
        //Press shift to move faster
        if (glfwGetKey(window, GLFW_KEY_LEFT_SHIFT) == GLFW_PRESS)
            m_CurrentSpeed = m_FastSpeed;
        else
            m_CurrentSpeed = m_Speed;

        //Move using WASD keys
        if (glfwGetKey(window, GLFW_KEY_W) == GLFW_PRESS)
            m_Position += GetSpeed() * m_Front;
        if (glfwGetKey(window, GLFW_KEY_S) == GLFW_PRESS)
            m_Position -= GetSpeed() * m_Front;
        if (glfwGetKey(window, GLFW_KEY_A) == GLFW_PRESS)
            m_Position -= GetSpeed() * glm::normalize(glm::cross(m_Front, m_Up));
        if (glfwGetKey(window, GLFW_KEY_D) == GLFW_PRESS)
            m_Position += GetSpeed() * glm::normalize(glm::cross(m_Front, m_Up));
    }

    void Camera::CalculateDirection(GLFWwindow* window)
    {
        double xPos;
        double yPos;
        glfwGetCursorPos(window, &xPos, &yPos);

        //Set the previous position to the current position the first time this method is called
        if (firstCursorMovement)
        {
            prefXPos = xPos;
            prefYPos = yPos;
            firstCursorMovement = false;
        }

        //Get the amount of mouse movement
        deltaX = xPos - prefXPos;
        deltaY = yPos - prefYPos;
        prefXPos = xPos;
        prefYPos = yPos;

        deltaX *= m_Sensitivity;
        deltaY *= m_Sensitivity;

        m_Pitch -= deltaY;
        m_Yaw += deltaX;

        //Limit the pitch to prevent the view from flipping upside down
        m_Pitch = glm::clamp(m_Pitch, -89.9f, 89.9f);

        glm::vec3 dir;

        //Convert yaw and pitch into a direction vector
        dir.x = glm::cos(glm::radians(m_Yaw)) * glm::cos(glm::radians(m_Pitch));
        dir.y = glm::sin(glm::radians(m_Pitch));
        dir.z = glm::sin(glm::radians(m_Yaw)) * glm::cos(glm::radians(m_Pitch));
        m_Front = glm::normalize(dir);
    }

    void Camera::SetTime()
    {
        //Get the delta time (this is used to make movement frame rate independent)
        m_CurrentFrame = glfwGetTime();
        m_DeltaTime = m_CurrentFrame - m_LastFrame;
        m_LastFrame = m_CurrentFrame;

        //Print the frame rate
        float frameRate;
        frameRate = 1 / m_DeltaTime;
        std::cout << frameRate << std::endl;
    }
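The direction math in CalculateDirection can be checked in isolation. The sketch below repeats the same yaw and pitch conversion with the standard math header instead of GLM, so it runs without a window. Vec3, Radians, ClampPitch, and DirectionFromYawPitch are illustrative names, not part of the Camera class.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float Radians(float degrees) {
    return degrees * 3.14159265358979f / 180.0f;
}

// Keeps the pitch just short of straight up or straight down.
inline float ClampPitch(float pitchDegrees) {
    return std::fmin(std::fmax(pitchDegrees, -89.9f), 89.9f);
}

// Converts yaw and pitch (in degrees) into a unit-length view direction,
// using the same formulas as Camera::CalculateDirection.
inline Vec3 DirectionFromYawPitch(float yawDegrees, float pitchDegrees) {
    const float yaw = Radians(yawDegrees);
    const float pitch = Radians(ClampPitch(pitchDegrees));
    return {std::cos(yaw) * std::cos(pitch),
            std::sin(pitch),
            std::sin(yaw) * std::cos(pitch)};
}
```

With the constructor defaults, a yaw of -90 degrees and a pitch of 0, the result points down the negative z axis. The clamp matters because a front vector that lines up exactly with the up vector leaves the cross product used by lookAt and by the strafing code with zero length.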